Invest. Support. Grow.

Nueterra Capital was founded to empower entrepreneurs: to equip innovative minds with the capital, resources, and expertise to help drive change. We provide financial investment as well as access to a network of resources to support our portfolio companies and create wealth for our investors.

Mission

Innovation is Our Bottom Line

Nueterra Capital invests in early- and growth-stage companies that are focused on changing the status quo. We equip innovative minds with the capital, resources, and expertise to catapult their growth, create wealth for our investors, and help drive transformation across a range of industries.

Investment Strategy

We Capitalize on Change

Our investment strategy is focused on identifying untapped opportunities for wealth creation. We believe the types of problems businesses and consumers face are changing, and with that change come significant opportunities for companies that can innovate quickly. We invest in companies that embrace change as a means of economic growth and demonstrate a commitment to their vision.

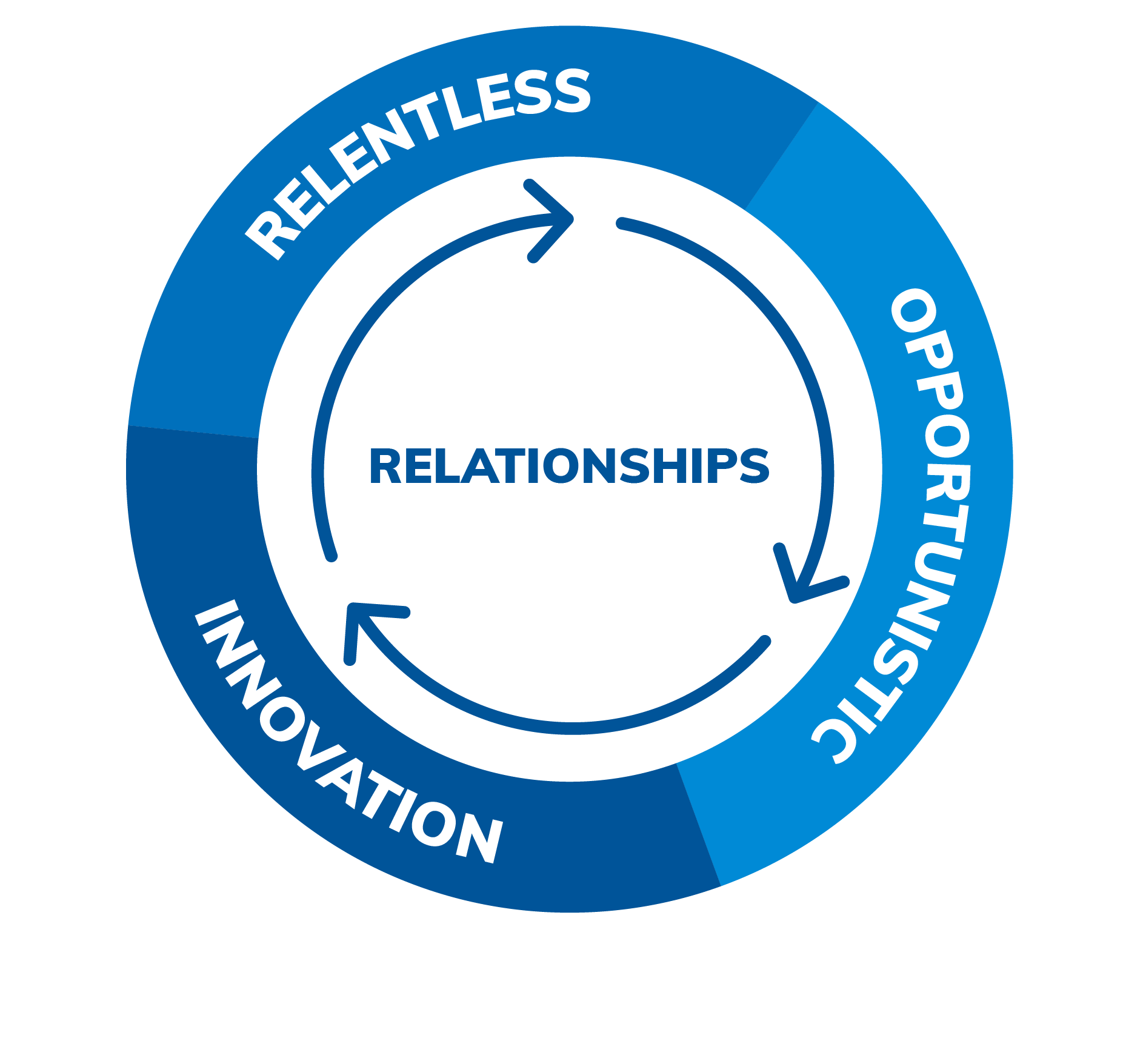

Our Values

Our Team

The Nueterra Capital team consists of experienced entrepreneurs and finance professionals who understand what it’s like to be a founder and how to capitalize on that experience to create wealth for our investors.

Dan Tasset

Chairman

Jeremy Tasset

Chief Executive Officer

Mark Jones

Chief Financial Officer

Emmanuel Emah-Emeni

Managing Director

Travis Tasset

Senior Vice President